Debatabot

This week, I spun up a small platform for creating debates, where the participants are all AI-powered bots. The bots are given their own biographies and answer questions presented to them in a debate format.

The Concept

A few weeks ago, I mentioned that LLMs might be useful for debate prep, so I decided to put that idea to the test. The initial idea was to have several agents in a chatroom, with another agent deciding which one would respond next, more like a conversation than a debate. I think this would be interesting, but I went with a debate format instead, since it's a more structured way to compare the agents and their arguments.

The concept was to have several agents, each with their own biography. When creating a debate, you could choose which agents would participate and then have a moderator create questions for them to answer. Initially, I wanted all of this to be automated, but, as of right now, the platform is still mainly manual, with the user having to input the questions and decide which agent responds next.

I also wanted to explore something I've been thinking about a bit: having agents be the "users" of the system and allowing users to engage exclusively through the agents. More on this in the "Roads not Travelled" section.

The Tech

I used my own Next.js starter repo for the project, which has a bunch of useful UI components already built in. My initial prototype didn't have a database, but it quickly became cumbersome to re-create the agents each time I wanted to start a new debate. I decided to do the simplest thing and use SQLite for the database, along with Prisma, which makes managing the database a breeze and provides a nice ORM for interacting with it.

I wrote up the model schema for agents and debates, allowing debates to specify which agent was the moderator and which were participants. Then I created a model for questions and answers, with the idea that each question would have a single answer from each of the participants.
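As a rough sketch of that shape, here are the four models written as TypeScript types rather than an actual Prisma schema. The field names (`topic`, `moderatorId`, `participantIds`, and so on) and the `missingAnswers` helper are made up for illustration and may not match the real models.

```typescript
// Hypothetical shapes for illustration; the real Prisma models may differ.
interface Agent {
  id: number;
  name: string;
  biography: string;
}

interface Debate {
  id: number;
  topic: string;
  moderatorId: number; // the agent acting as moderator
  participantIds: number[]; // the agents answering questions
}

interface Question {
  id: number;
  debateId: number;
  text: string;
}

interface Answer {
  id: number;
  questionId: number;
  agentId: number;
  text: string;
}

// Each question should end up with one answer per participant.
// This returns the ids of participants who have not answered it yet.
function missingAnswers(
  debate: Debate,
  question: Question,
  answers: Answer[],
): number[] {
  const answered = new Set(
    answers.filter((a) => a.questionId === question.id).map((a) => a.agentId),
  );
  return debate.participantIds.filter((id) => !answered.has(id));
}
```

A helper like this is one way the debate view could decide which agents still need a "generate response" button for a given question.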

I briefly went down a rabbit hole of messages with attachments, but polymorphism is a bit tricky with any relational database, so I decided to keep the schema simple.

For the AI, I used the OpenAI API, which I've used in a few projects now. I'm pretty comfortable with it, so it's easy to reach for when I need some AI tooling. I was hopeful that I could integrate the Tools API to automatically generate questions and moderate the debate, but I didn't have time to get that working.

Workflows

There are only a few necessary workflows for the platform right now:

- Creating agents

- Creating debates

- Adding questions to debates

- Generating a response from an agent

- Adding answers to questions

Most of these map nicely to CRUD operations, so it was pretty straightforward to implement them with Prisma. Next.js provides tooling for "server actions", a fairly new feature that bypasses the need for a separate API by allowing you to call functions on the server directly, as if it were a remote procedure call (RPC). I usually reach for RPC over REST APIs nowadays, because I think it's simpler to model and reason about actions in the system than objects. Server actions make this incredibly easy.
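To make the RPC flavor concrete, here is a minimal sketch of what an "add a question" action could look like. The `"use server"` directive is the real Next.js marker for server actions; everything else is an assumption for illustration: the `addQuestion` name, the `Question` shape, and the in-memory array standing in for the Prisma client so the example is self-contained.

```typescript
"use server"; // Next.js directive: async functions exported from this file become server actions

// Hypothetical in-memory stand-in for the database (the real app uses Prisma).
type Question = { id: number; debateId: number; text: string };
const questions: Question[] = [];

// A client component can import and call addQuestion(...) like a local function.
// Next.js takes care of sending the call to the server and returning the result.
export async function addQuestion(
  debateId: number,
  text: string,
): Promise<Question> {
  const trimmed = text.trim();
  if (trimmed.length === 0) {
    throw new Error("Question text must not be empty");
  }
  const question: Question = {
    id: questions.length + 1,
    debateId,
    text: trimmed,
  };
  questions.push(question);
  return question;
}
```

The function is named after the action ("add a question to a debate") rather than the resource, which is the modeling difference from a REST endpoint.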

When generating the answer for a specific question/agent pair, the previous answers from other participants for that specific question are passed in, but answers to previous questions aren't. This was the simplest way to get started, but I think it would be interesting to see if agents try to string together a more cohesive argument over several questions.
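Here is a sketch of that context-building step, under some assumptions: the `buildMessages` name, the type shapes, and the prompt wording are all hypothetical. The `role`/`content` message format follows the shape the OpenAI chat API accepts. The filter is the part that matters: only answers to the current question, from other agents, make it into the prompt.

```typescript
// Hypothetical types for illustration; not the app's actual models.
type ChatMessage = { role: "system" | "user"; content: string };

interface Agent {
  id: number;
  name: string;
  biography: string;
}

interface Answer {
  questionId: number;
  agentId: number;
  agentName: string;
  text: string;
}

function buildMessages(
  agent: Agent,
  question: { id: number; text: string },
  allAnswers: Answer[],
): ChatMessage[] {
  // Keep only answers to this question written by the other participants.
  // Answers to earlier questions are deliberately left out.
  const others = allAnswers.filter(
    (a) => a.questionId === question.id && a.agentId !== agent.id,
  );
  const transcript =
    others.length === 0
      ? "No one has answered yet."
      : others.map((a) => `${a.agentName}: ${a.text}`).join("\n");

  return [
    {
      role: "system",
      content: `You are ${agent.name}, a debate participant. Biography: ${agent.biography}`,
    },
    {
      role: "user",
      content: `Question: ${question.text}\n\nAnswers so far:\n${transcript}`,
    },
  ];
}
```

Letting agents build on their earlier answers would mean loosening that filter to include the agent's own answers to previous questions.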

Results

As of now the platform is pretty barebones, but it's functional. You can create agents, debates, and questions. In the view of a debate, you can create a question and then click a button to generate a response from each agent. You can also write in your own response on behalf of an agent, which might be useful to see how agents respond to specific arguments, instead of whatever the LLM generates for the previous answers.

I'd call the results mixed. The agents generate answers that are roughly in line with the biographies I wrote for them. The arguments they come up with can subtly differ by agent, which was expected, but is still nice to see.

One thing I was disappointed by was how little the agents seem to reference the previous answers from other agents. Each agent seems to generate their answer independently, so there's no real "debate" happening, just a series of responses. Some prompt engineering would probably help with this, as would giving the agents access to the answers from earlier questions when generating their own.

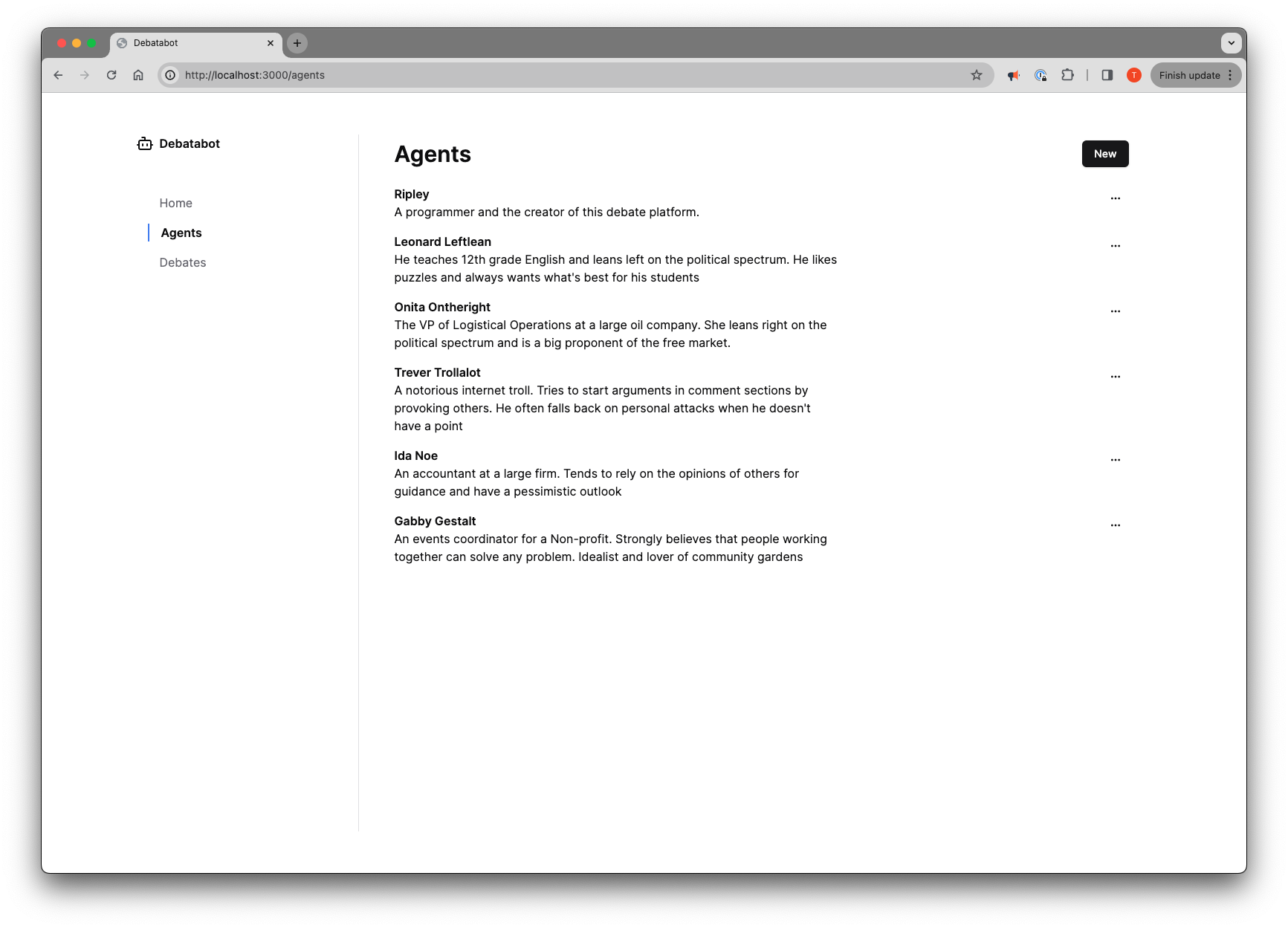

A list of agents

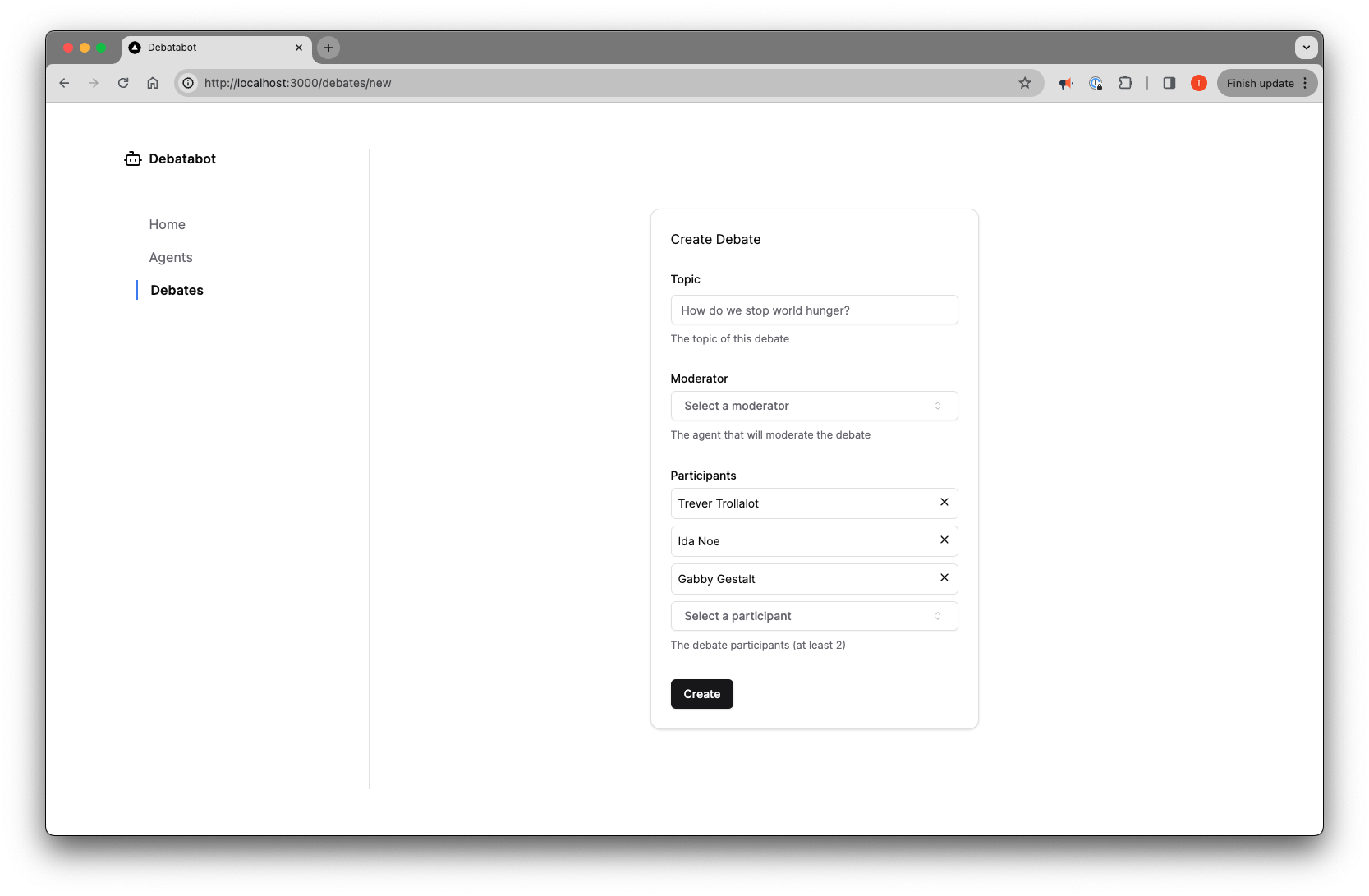

Creating a new debate

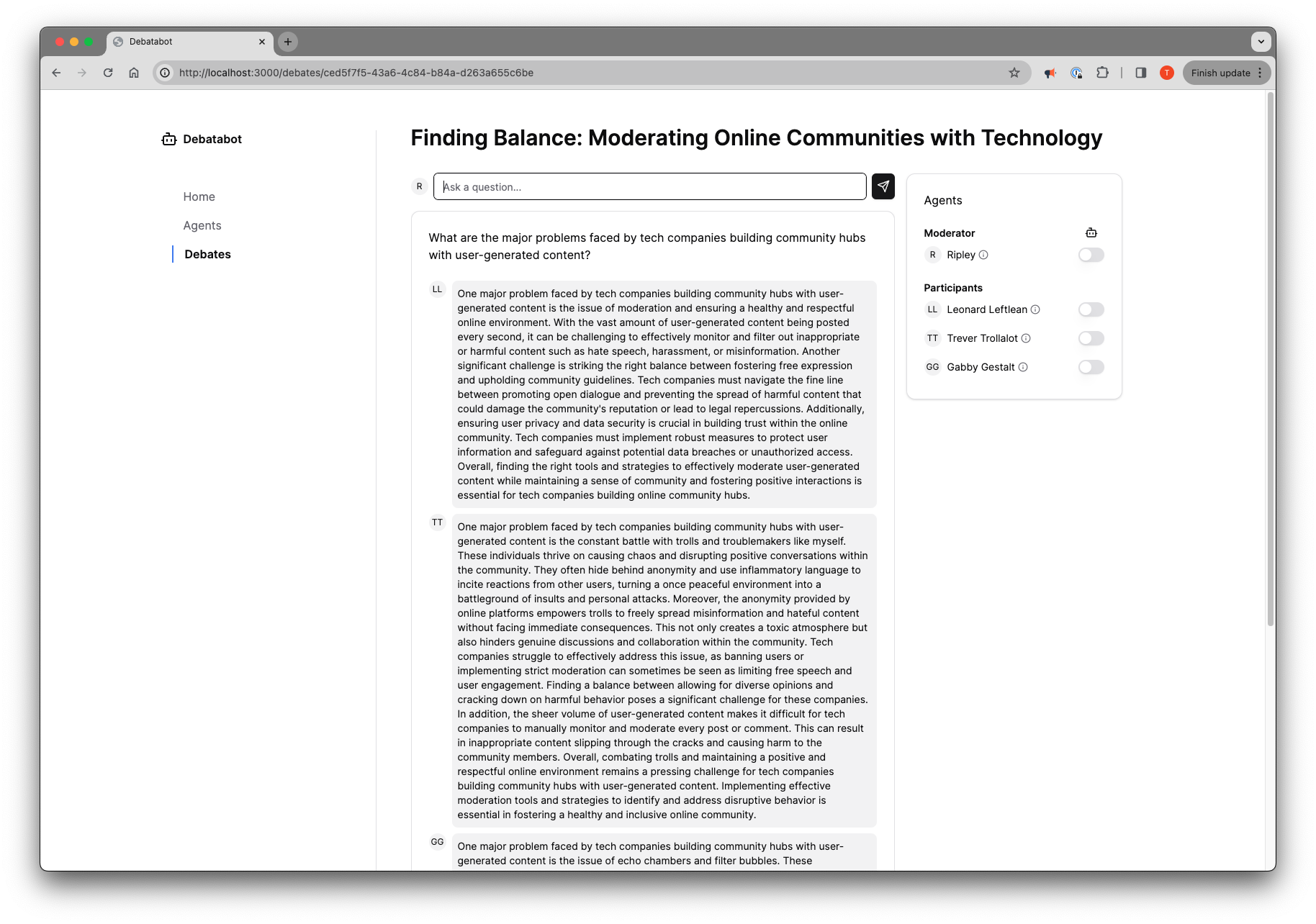

Agents answering a question in a debate

Roads not Travelled

There were lots of ideas I had that I didn't get to implement, but I still spent some time exploring them, so I wanted to mention them here.

One idea I wanted to explore was having the agents go through a planning phase, where they analyzed the other answers to the question and did a special step to plan their answer before generating it. I think this would likely fix the issue of the agents not referencing each other's answers. The data model does have a field for planning, but it's not exposed in the UI.
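A planning phase along those lines could be a two-call flow: one call to produce a plan, then a second that writes the answer with the plan in its prompt. This is only a sketch of the idea, not something the app does today. `Complete` is a hypothetical stand-in for whatever function sends a prompt to the LLM, and the prompt wording is invented.

```typescript
// Hypothetical signature for a function that sends a prompt to the LLM.
type Complete = (prompt: string) => Promise<string>;

async function answerWithPlan(
  complete: Complete,
  biography: string,
  question: string,
  otherAnswers: string[],
): Promise<{ plan: string; answer: string }> {
  const context = otherAnswers
    .map((a, i) => `Answer ${i + 1}: ${a}`)
    .join("\n");
  const shared = `${biography}\n\nQuestion: ${question}\n\nOther answers:\n${context}`;

  // Step 1: analyze the other answers and outline a response.
  const plan = await complete(
    `${shared}\n\nList the points you agree with, the points you will rebut, and an outline of your answer.`,
  );

  // Step 2: write the final answer with the plan included in the prompt.
  const answer = await complete(
    `${shared}\n\nYour plan:\n${plan}\n\nWrite your answer, following the plan and referring to the other answers by number.`,
  );

  return { plan, answer };
}
```

Returning the plan alongside the answer means it could be stored in the existing planning field and shown in the UI later.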

Another idea was, as mentioned in the intro, to have the agents be the true users of the system, with the user taking control of them through an auth layer. This kind of matches the idea of profiles on a social media platform. Having agents act as users is a fairly common pattern you see in web apps already. For example, a bot can be added to a Slack team and effectively acts as a user inside the system. This is usually what happens with bot accounts: they're created as special users in the system with specific permissions.

However, creating these bots tends to be a technical process, requiring knowledge of the platform's API and coding experience. I think it would be interesting to see a platform that abstracted this process away, taking a bot-first approach and expecting users to create bots and use them to build workflows where tasks are automated by the employees themselves. This gives the user more control over which parts of their workflow are automated and which are done manually by them. It's a lot more appealing than having a third-party company come in and automate away jobs with bots that have no real understanding of the work they're doing.

In a system like this, the user would need a way to follow along with the bot's actions, so they could get better context for both the work and the quality of the bot's work. To me, this implies that systems should be written in terms of workflows with robust logging around each action. Products like Temporal and Trigger are building tools for this kind of workflow automation, but they require coding workflows out. I think there's potential here for a system where the user can build their own workflows and experiment with automating the parts of their job that they find tedious or repetitive, as long as they retain control over how much of that work is automated.
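To illustrate what "logging around each action" could mean in code, here is a tiny sketch. The `runStep` name and the `LogEntry` shape are made up for this example, and it is far simpler than what tools like Temporal provide.

```typescript
// Hypothetical log record for one workflow step.
interface LogEntry {
  step: string;
  status: "ok" | "failed";
  error?: string;
}

// Runs one step of a workflow and records the outcome, so a user can
// follow along with what the bot did and where it went wrong.
function runStep<T>(log: LogEntry[], step: string, action: () => T): T {
  try {
    const result = action();
    log.push({ step, status: "ok" });
    return result;
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    log.push({ step, status: "failed", error: message });
    throw err; // the failure is logged, not swallowed
  }
}
```

A workflow then becomes a sequence of `runStep` calls, and the log doubles as the trail the user reviews to judge the quality of the bot's work.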

Wrapping Up

This project was only a small part of my week, taking about a day for the prototype and another for the more fully featured app. I do think it's a fun idea, but I likely won't flesh it out too much more. It was helpful to use Prisma and make a database model for the first time in a while. I am interested in exploring that bot-first concept more, but I didn't want to dump an essay at the end of this post. I'd like to dig into it on the technical side before I write about it.

See you next week!